WEKA (Waikato Environment for Knowledge Analysis) is a collection of machine learning algorithms for data mining tasks. It contains tools for data preparation, classification, regression, clustering, association rules mining, and visualization. It also supports deep learning.

It is written in Java and developed at the University of Waikato, New Zealand. Weka is open source software released under the GNU General Public License.

Weka provides access to SQL databases using Java Database Connectivity (JDBC) and can use the result of a SQL query as its data source. Weka does not support processing of related (linked) tables; however, there are tools that can join separate tables into a single table, which can then be loaded directly into Weka.

There are two versions of Weka: Weka 3.8 is the latest stable version and Weka 3.9 is the development version. For the bleeding edge, it is also possible to download daily snapshots. Stable versions receive only bug fixes, while the development version receives new features.

User interfaces:

It has five user interfaces:

1-Simple CLI:

Provides full access to all Weka classes, i.e., classifiers, filters, clusterers, etc., but without the hassle of the CLASSPATH. It offers a simple Weka shell with separate command-line input and output.

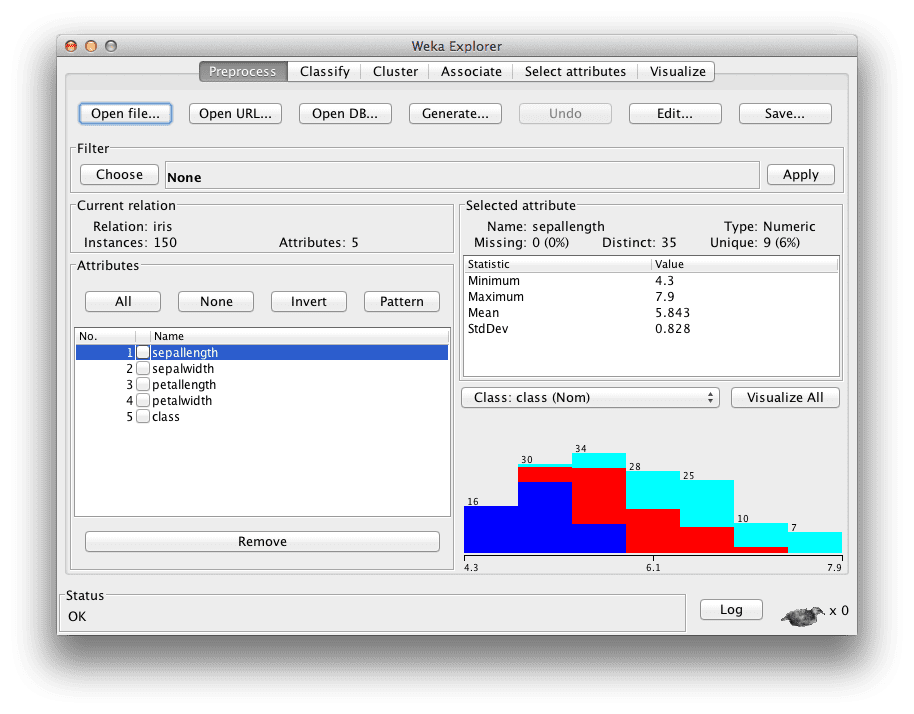

2-Explorer:

An environment for exploring data with WEKA. It has several tabs:

Preprocess: lets you choose and modify the data being acted on.

Classify: to train and test learning schemes that classify or perform regression.

Cluster: to learn clusters for the data.

Associate: to learn association rules for the data.

Select attributes: to select the most relevant attributes in the data.

Visualize: lets you view an interactive 2D plot of the data.

3-Experimenter:

An environment that enables the user to create, run, modify, and analyze experiments more conveniently than is possible when processing the schemes individually.
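A typical Experimenter run evaluates every scheme on the same train/test splits, for example with k-fold cross-validation. As a minimal sketch of that splitting step, assuming plain (unstratified) folds, the hypothetical class below shuffles instance indices and deals them into k folds. It uses only the JDK and is not Weka's API; Weka's own evaluation code can also stratify folds by class.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch: assign n instance indices to k cross-validation folds. */
class CrossValidationSketch {

    /** Shuffles indices 0..n-1 with a fixed seed and deals them round-robin into k folds. */
    static List<List<Integer>> folds(int n, int k, long seed) {
        if (k < 2 || k > n) {
            throw new IllegalArgumentException("need 2 <= k <= n");
        }
        List<Integer> indices = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            indices.add(i);
        }
        // A fixed seed makes the split reproducible, so every scheme sees the same folds.
        Collections.shuffle(indices, new Random(seed));
        List<List<Integer>> result = new ArrayList<>();
        for (int f = 0; f < k; f++) {
            result.add(new ArrayList<>());
        }
        for (int i = 0; i < n; i++) {
            result.get(i % k).add(indices.get(i));
        }
        return result;
    }
}
```

Each fold serves once as the test set while the remaining k - 1 folds form the training set. Reusing the same seed for every scheme keeps the comparison fair.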

4-KnowledgeFlow:

Supports essentially the same functions as the Explorer, but with a drag-and-drop interface. The KnowledgeFlow can handle data either incrementally or in batches (the Explorer handles batch data only).
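The difference between batch and incremental processing can be shown with something as simple as a mean. In the hypothetical sketch below (plain Java, not Weka's API), the batch version needs the whole array in memory. The incremental version updates a running mean one instance at a time, the way a streaming component would.

```java
/** Hypothetical sketch contrasting batch and incremental processing of one numeric attribute. */
class IncrementalMeanSketch {

    /** Batch: all values must be available at once. */
    static double batchMean(double[] values) {
        double sum = 0.0;
        for (double v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    /** Incremental: stores only a count and the current mean, updated per instance. */
    static final class RunningMean {
        private long count = 0;
        private double mean = 0.0;

        void update(double value) {
            count++;
            // New mean = old mean + (value - old mean) / count.
            mean += (value - mean) / count;
        }

        double mean() {
            return mean;
        }
    }
}
```

Both versions give the same answer. The incremental one uses constant memory, which is what makes processing unbounded streams possible.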

5-Workbench:

A newer UI, available from Weka 3.8.0 onward. The Workbench is an all-in-one application that subsumes all the major WEKA GUIs described above.

Features:

- Cross-platform support (Windows, macOS, and Linux).

- Free and open source.

- Ease of use (includes a GUI).

- A comprehensive collection of data preprocessing and modeling techniques.

- Supports deep learning.

Packages:

Weka has a large number of regression and classification tools. Some examples are:

BayesianLogisticRegression: Implements Bayesian logistic regression for both Gaussian and Laplace priors.

BayesNet: Bayes network learning using various search algorithms and quality measures.

GaussianProcesses: Implements Gaussian processes for regression without hyperparameter tuning.

LinearRegression: Class for using linear regression for prediction.
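Weka's LinearRegression works with many attributes and can select among them. To show just the core least-squares fit, the hypothetical sketch below assumes a single numeric input attribute. It computes the slope as cov(x, y) / var(x) and the intercept as mean(y) - slope * mean(x). It is plain Java, not Weka's class.

```java
/** Hypothetical sketch: ordinary least squares with one input attribute, y = intercept + slope * x. */
class SimpleLinearRegressionSketch {
    final double intercept;
    final double slope;

    SimpleLinearRegressionSketch(double[] x, double[] y) {
        if (x.length != y.length || x.length < 2) {
            throw new IllegalArgumentException("need at least two paired values");
        }
        int n = x.length;
        double meanX = 0.0;
        double meanY = 0.0;
        for (int i = 0; i < n; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= n;
        meanY /= n;
        // Sums of centred cross-products and squares.
        double sxy = 0.0;
        double sxx = 0.0;
        for (int i = 0; i < n; i++) {
            double dx = x[i] - meanX;
            sxy += dx * (y[i] - meanY);
            sxx += dx * dx;
        }
        if (sxx == 0.0) {
            throw new IllegalArgumentException("x has zero variance");
        }
        slope = sxy / sxx;
        intercept = meanY - slope * meanX;
    }

    double predict(double x) {
        return intercept + slope * x;
    }
}
```

For the points (1, 3), (2, 5), (3, 7), (4, 9), the fit recovers slope 2 and intercept 1.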

MultilayerPerceptron: A classifier that uses backpropagation to classify instances.

NonNegativeLogisticRegression: Class for learning a logistic regression model that has non-negative coefficients.

PaceRegression: Class for building pace regression linear models and using them for prediction.

SMO: Implements John Platt's sequential minimal optimization algorithm for training a support vector classifier.

ADTree: Class for generating an alternating decision tree.

BFTree: Class for building a best-first decision tree classifier.

HoeffdingTree: A Hoeffding tree (VFDT) is an incremental, anytime decision tree induction algorithm that is capable of learning from massive data streams, assuming that the distribution generating examples does not change over time.
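A Hoeffding tree decides when it has seen enough examples to split a leaf by using the Hoeffding bound. With probability 1 - delta, the true mean of a variable with range R lies within epsilon = sqrt(R^2 ln(1/delta) / (2n)) of its mean over n observations. The hypothetical sketch below (plain Java, not Weka's implementation) splits when the best attribute's gain beats the runner-up's by more than epsilon. It also splits when epsilon drops below a tie threshold, as in the VFDT algorithm. The parameter values in the test are illustrative assumptions.

```java
/** Hypothetical sketch of the split decision used in Hoeffding tree (VFDT) induction. */
class HoeffdingBoundSketch {

    /** epsilon = sqrt(R^2 * ln(1/delta) / (2n)); R is the range of the split criterion. */
    static double bound(double range, double delta, long n) {
        if (n <= 0) {
            throw new IllegalArgumentException("n must be positive");
        }
        return Math.sqrt(range * range * Math.log(1.0 / delta) / (2.0 * n));
    }

    /**
     * Split if the best attribute is reliably better than the second best, or if the two
     * are so close (epsilon below the tie threshold) that waiting longer is pointless.
     */
    static boolean shouldSplit(double bestGain, double secondBestGain,
                               double range, double delta, long n, double tieThreshold) {
        double epsilon = bound(range, delta, n);
        return bestGain - secondBestGain > epsilon || epsilon < tieThreshold;
    }
}
```

For information gain with two classes the range R is log2(2) = 1. With delta = 1e-7 and n = 1000, epsilon is roughly 0.09. Epsilon shrinks as more examples arrive, which is what lets the tree grow from a stream.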

M5P: M5Base. Implements base routines for generating M5 model trees and rules.

RandomForest: Class for constructing a forest of random trees.
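A random forest combines bagging with random trees. Each tree is grown on a bootstrap sample of the training data, and the trees' predictions are then combined. The hypothetical sketch below shows those two ingredients in plain Java. It combines predictions by simple majority vote, which is an assumption; Weka's implementation may instead average the trees' class-probability estimates.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

/** Hypothetical bagging sketch: bootstrap sampling plus majority voting over member predictions. */
class BaggingSketch {

    /** Draws n training-instance indices uniformly with replacement (a bootstrap sample). */
    static int[] bootstrapIndices(int n, Random random) {
        int[] sample = new int[n];
        for (int i = 0; i < n; i++) {
            sample[i] = random.nextInt(n);
        }
        return sample;
    }

    /** Returns the most frequent predicted class index; ties go to the smaller index. */
    static int majorityVote(int[] predictions) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int p : predictions) {
            counts.merge(p, 1, Integer::sum);
        }
        int best = -1;
        int bestCount = 0;
        for (Map.Entry<Integer, Integer> e : counts.entrySet()) {
            int label = e.getKey();
            int count = e.getValue();
            if (count > bestCount || (count == bestCount && label < best)) {
                best = label;
                bestCount = count;
            }
        }
        return best;
    }
}
```

Because sampling is with replacement, each bootstrap sample typically repeats some instances and omits others. This variation is what makes the trees differ.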

RandomTree: Class for constructing a tree that considers K randomly chosen attributes at each node. Performs no pruning.
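Choosing "K randomly chosen attributes at each node" amounts to sampling K distinct indices without replacement. The hypothetical helper below does this with a partial Fisher-Yates shuffle in plain Java. It is not Weka's code; the tree would then evaluate splits only on the returned attributes.

```java
import java.util.Arrays;
import java.util.Random;

/** Hypothetical sketch: pick K distinct candidate attributes for one tree node. */
class RandomAttributeSketch {

    /** Partial Fisher-Yates shuffle: after k swaps, the first k slots hold k distinct random indices. */
    static int[] chooseAttributes(int numAttributes, int k, Random random) {
        if (k < 1 || k > numAttributes) {
            throw new IllegalArgumentException("need 1 <= k <= numAttributes");
        }
        int[] pool = new int[numAttributes];
        for (int i = 0; i < numAttributes; i++) {
            pool[i] = i;
        }
        for (int i = 0; i < k; i++) {
            // Swap a uniformly chosen not-yet-picked index into position i.
            int j = i + random.nextInt(numAttributes - i);
            int tmp = pool[i];
            pool[i] = pool[j];
            pool[j] = tmp;
        }
        return Arrays.copyOf(pool, k);
    }
}
```

A fresh subset is drawn at every node, so different branches of the same tree can split on different attributes.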

SimpleCart: Class implementing minimal cost-complexity pruning.
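Minimal cost-complexity pruning repeatedly removes the "weakest link". This is the internal node t with the smallest g(t) = (R(t) - R(T_t)) / (|leaves(T_t)| - 1). Here R(t) is the training error if t were collapsed into a leaf, and R(T_t) is the error of the subtree rooted at t. The hypothetical sketch below computes that score in plain Java, with errors expressed as misclassified training counts. It is not SimpleCart's code.

```java
/** Hypothetical sketch of the weakest-link step in minimal cost-complexity pruning. */
class CostComplexitySketch {

    /** g(t) = (R(t) - R(T_t)) / (|leaves(T_t)| - 1): error increase per leaf removed. */
    static double alpha(double leafError, double subtreeError, int subtreeLeaves) {
        if (subtreeLeaves < 2) {
            throw new IllegalArgumentException("subtree must have at least two leaves");
        }
        return (leafError - subtreeError) / (subtreeLeaves - 1);
    }

    /** Index of the internal node with the smallest g(t): the next one to prune. */
    static int weakestLink(double[] leafErrors, double[] subtreeErrors, int[] subtreeLeaves) {
        int best = -1;
        double bestAlpha = Double.POSITIVE_INFINITY;
        for (int i = 0; i < leafErrors.length; i++) {
            double a = alpha(leafErrors[i], subtreeErrors[i], subtreeLeaves[i]);
            if (a < bestAlpha) {
                best = i;
                bestAlpha = a;
            }
        }
        return best;
    }
}
```

For example, a node whose subtree has 3 leaves and cuts the error from 20 to 10 gets g = 5. A node with 5 leaves that cuts the error from 15 to 5 gets g = 2.5 and is pruned first. Repeating this produces the nested sequence of trees from which the final one is chosen.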

IBk: K-nearest neighbours classifier. Can select an appropriate value of K based on cross-validation. Can also do distance weighting.
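The core of a k-nearest-neighbours classifier fits in a few lines. The hypothetical sketch below uses Euclidean distance and optional 1/distance vote weighting, which is one of the weighting schemes IBk offers. It is plain Java, not Weka's API, and it leaves out IBk's cross-validated choice of K and its attribute normalization.

```java
import java.util.Arrays;
import java.util.Comparator;

/** Hypothetical k-NN sketch: Euclidean distance, optional inverse-distance vote weighting. */
class KnnSketch {

    static double euclidean(double[] a, double[] b) {
        double sum = 0.0;
        for (int j = 0; j < a.length; j++) {
            double d = a[j] - b[j];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    /** Returns the class index (0..numClasses-1) with the largest vote among the k nearest neighbours. */
    static int classify(double[][] train, int[] labels, int numClasses,
                        double[] query, int k, boolean inverseDistanceWeighting) {
        double[] dist = new double[train.length];
        Integer[] order = new Integer[train.length];
        for (int i = 0; i < train.length; i++) {
            dist[i] = euclidean(train[i], query);
            order[i] = i;
        }
        // Sort training indices by distance to the query, nearest first.
        Arrays.sort(order, Comparator.comparingDouble((Integer idx) -> dist[idx]));
        double[] votes = new double[numClasses];
        int neighbours = Math.min(k, train.length);
        for (int n = 0; n < neighbours; n++) {
            int i = order[n];
            // A tiny constant avoids division by zero when the query equals a training point.
            double weight = inverseDistanceWeighting ? 1.0 / (dist[i] + 1e-9) : 1.0;
            votes[labels[i]] += weight;
        }
        int best = 0;
        for (int c = 1; c < numClasses; c++) {
            if (votes[c] > votes[best]) {
                best = c;
            }
        }
        return best;
    }
}
```

Weighting can change the answer. Take a query at 0.5 with neighbours at 0.0 (class 0), 3.0 and 3.2 (class 1), and k = 3. Unweighted voting picks class 1, while 1/distance weighting picks class 0 because the single close neighbour dominates.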

IB1: Nearest-neighbour classifier.

LBR: Lazy Bayesian Rules classifier.

DecisionTable: Class for building and using a simple decision table majority classifier.
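A decision table majority classifier stores, for each combination of values of a chosen attribute subset, the class counts of the matching training rows. It predicts the majority class of the matching entry and falls back to the overall majority class when no entry matches. In the hypothetical sketch below (plain Java, not Weka's class), the key attributes are fixed by hand. This is an assumption: Weka's DecisionTable searches for a good attribute subset itself.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical decision table majority classifier over nominal (String) attributes. */
class DecisionTableSketch {
    private final int[] keyAttributes;
    private final Map<List<String>, Map<String, Integer>> table = new HashMap<>();
    private final Map<String, Integer> globalCounts = new HashMap<>();

    DecisionTableSketch(int[] keyAttributes) {
        this.keyAttributes = keyAttributes.clone();
    }

    /** Counts class labels per key (projection of a row onto the key attributes). */
    void train(String[][] rows, String[] classes) {
        for (int i = 0; i < rows.length; i++) {
            table.computeIfAbsent(keyOf(rows[i]), k -> new HashMap<>()).merge(classes[i], 1, Integer::sum);
            globalCounts.merge(classes[i], 1, Integer::sum);
        }
    }

    /** Majority class of the matching table entry, or of all training data if there is none. */
    String classify(String[] row) {
        return majority(table.getOrDefault(keyOf(row), globalCounts));
    }

    private List<String> keyOf(String[] row) {
        String[] key = new String[keyAttributes.length];
        for (int j = 0; j < keyAttributes.length; j++) {
            key[j] = row[keyAttributes[j]];
        }
        return Arrays.asList(key);
    }

    /** Most frequent label; ties go to the lexicographically smallest label. */
    private static String majority(Map<String, Integer> counts) {
        String best = null;
        int bestCount = -1;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            int c = e.getValue();
            if (c > bestCount || (c == bestCount && e.getKey().compareTo(best) < 0)) {
                best = e.getKey();
                bestCount = c;
            }
        }
        return best;
    }
}
```

Keying on outlook and humidity, a row ("sunny", ..., "high") looks up the entry for that pair. An unseen pair such as ("overcast", ..., "high") falls back to the overall majority class.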

M5Rules: Generates a decision list for regression problems using separate-and-conquer. In each iteration it builds a model tree using M5 and makes the "best" leaf into a rule.

ZeroR: Class for building and using a 0-R classifier. Predicts the mean (for a numeric class) or the mode (for a nominal class).
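ZeroR ignores every attribute, which makes it a useful baseline that any real scheme should beat. The hypothetical sketch below (plain Java, not Weka's class) shows both cases: the mean for a numeric class and the mode for a nominal one. Ties in the nominal case go to the label seen first, which is an assumption of this sketch.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch of the 0-R rule: predict from the training class values alone. */
class ZeroRSketch {

    /** Numeric class: predict the mean of the training class values. */
    static double predictNumeric(double[] classValues) {
        if (classValues.length == 0) {
            throw new IllegalArgumentException("no training values");
        }
        double sum = 0.0;
        for (double v : classValues) {
            sum += v;
        }
        return sum / classValues.length;
    }

    /** Nominal class: predict the most frequent label (ties go to the label seen first). */
    static String predictNominal(String[] classValues) {
        // LinkedHashMap keeps first-seen order, so the strict '>' below keeps the earliest label on ties.
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String v : classValues) {
            counts.merge(v, 1, Integer::sum);
        }
        String best = null;
        int bestCount = 0;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() > bestCount) {
                best = e.getKey();
                bestCount = e.getValue();
            }
        }
        if (best == null) {
            throw new IllegalArgumentException("no training values");
        }
        return best;
    }
}
```

For example, the numeric class values 1, 2, 3, 6 give a constant prediction of 3.0 for every instance.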

ClassificationViaRegression: Class for doing classification using regression methods.

Stacking: Combines several classifiers using the stacking method. Can do classification or regression.

DataNearBalancedND: A meta classifier for handling multi-class datasets with 2-class classifiers by building a random data-balanced tree structure.

MDD: Modified Diverse Density algorithm, with collective assumption.

MINND: Multiple-Instance Nearest Neighbour with Distribution learner.